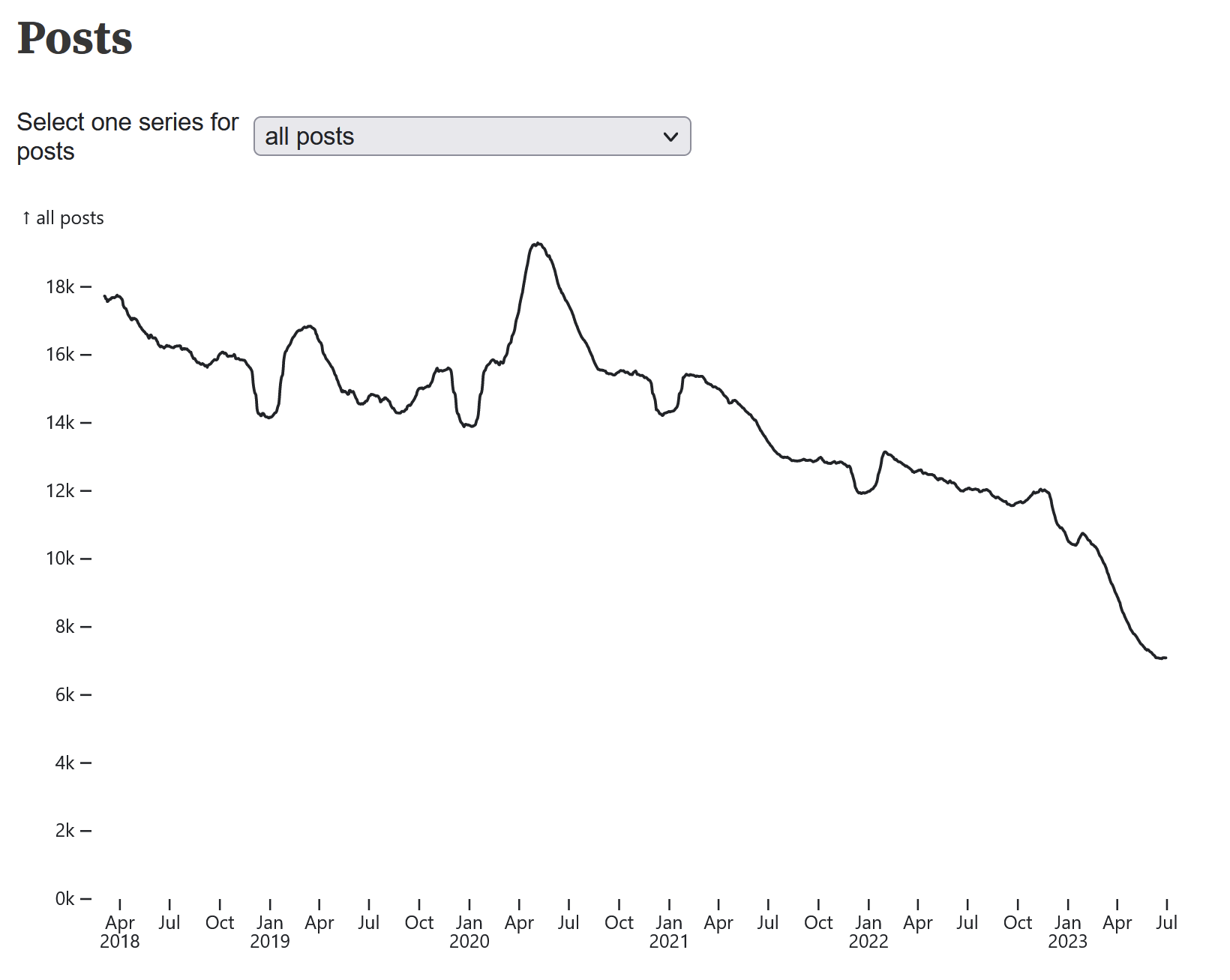

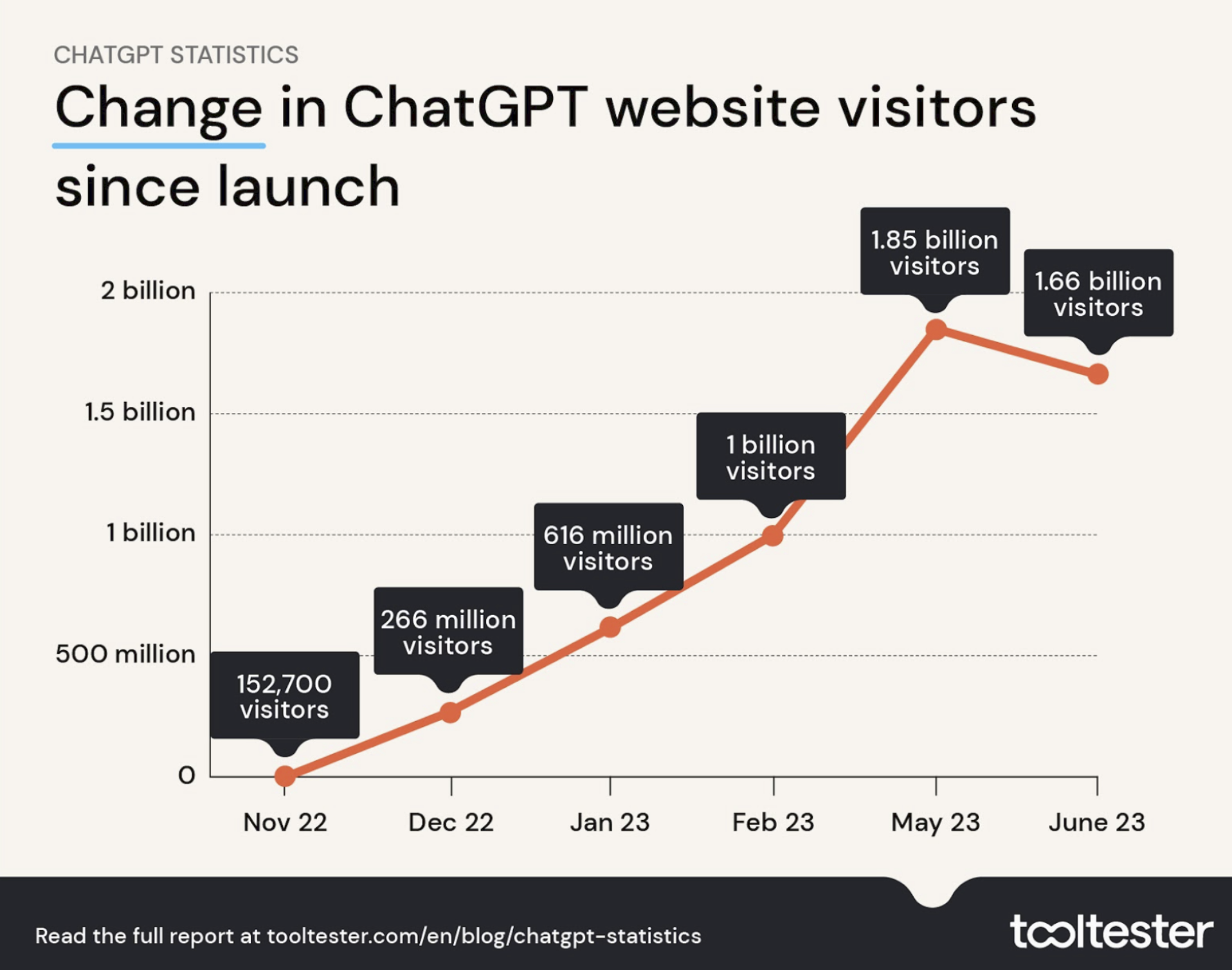

I like the experience of using Copilot and GPT much better than browsing SO, but this is what worries me in the long term:

This issue goes beyond the survival of Stack Overflow. All AI models need a steady flow of quality human data to train on. Without that, they'll be left to rely on machine-generated content, and researchers have found that this leads to worse performance. There's an ominous name for this: model collapse.

Without this incredible knowledge sharing and curated feedback, in an environment that constantly changes with new libraries, languages, and best practices, these LLMs are doomed. I think solving this might be Stack Overflow's way out.