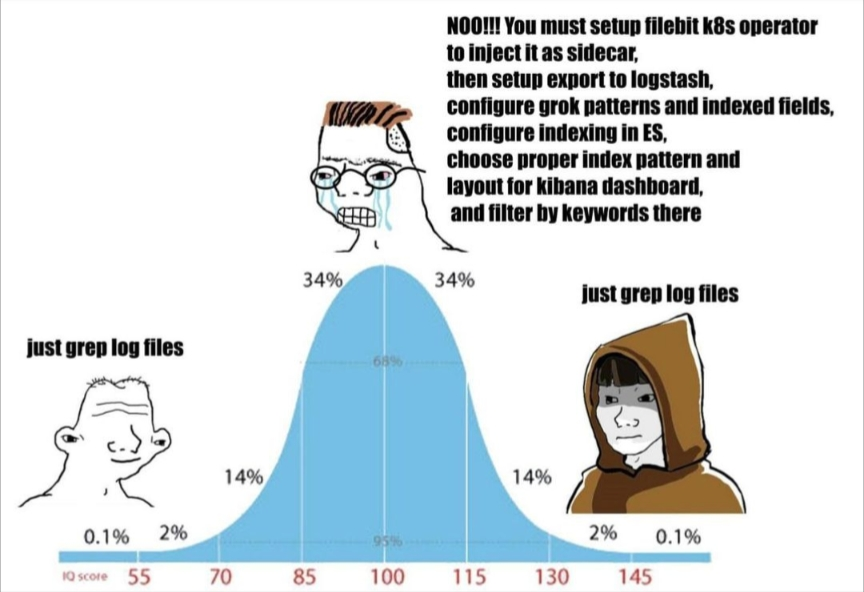

I've only ever grepped log files. 9 years into my career now, so not sure which side of the spectrum I'm on (I'm definitely on the spectrum)

I've been grepping logs since the 80s/90s, and I still do

I have scripts to ssh and grep my logs across multiple VMs. Way faster than our crap Splunk instance. Pipe that shit through awk and I can find anything!
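A minimal local sketch of the grep-then-awk habit, run against a small made-up log file. The "timestamp level component message" layout is an assumption for the demo, not any particular app's format: grep narrows to error lines, then awk tallies them per component.

```shell
# Sample log in a hypothetical "timestamp level component message" layout.
log=$(mktemp)
cat > "$log" <<'EOF'
2024-05-01T10:00:01 INFO  api request ok
2024-05-01T10:00:02 ERROR db  connection refused
2024-05-01T10:00:03 ERROR api upstream timeout
2024-05-01T10:00:04 ERROR db  connection refused
EOF

# grep keeps only ERROR lines; awk counts them by the 3rd whitespace-separated field.
# awk's for-in order is unspecified, so sort the result for stable output.
per_component=$(grep ERROR "$log" |
  awk '{ count[$3]++ } END { for (c in count) print c, count[c] }' |
  sort)
printf '%s\n' "$per_component"
rm -f "$log"
```

Across several VMs the same pipeline works on the combined output of the ssh loop, with the host name prepended as an extra field if you need it.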

Here we are all on the spectrum, my friend.

I'm running Grafana Loki for my company now and I'll never go back to anything else. Loki acts like grep, is blazing fast, and is low-maintenance. If it sounds like magic, it kind of is.

I saw this post and genuinely thought one of my teammates wrote it.

I had to manage an ELK stack and it was a full-time job when we were supposed to be focusing on other important SRE work.

Then we switched to Loki + Grafana and it's been amazing. Loki is literally a k8s-wide grep by default, but it also has an amazing query language for filtering and transforming logs into tables, or even running Prometheus-style queries on top of a log query to get a graph.

Managing Loki is super simple because it makes the trade-off of not indexing anything other than the Kubernetes labels, which are always going to be the same regardless of the app. And retention is just a breeze, since all the data is stored in a bucket and not on the cluster.

Sorry for gushing about Loki but I genuinely was that rage wojak before we switched. I am so much happier now.

We do Grafana + Prometheus for most of our clients, but I think adding Loki into the mix might be necessary. The number of clients that miss basic events like "you've run out of disk space... two days ago" is too damn high.

Sounds like you need an alerting/monitoring system, not a logging system. Something like Nagios, where you immediately get an alert if something is past its limits and you don't have to rely on logging.
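The kind of check a system like Nagios runs can be as small as a script that prints one status line and exits 0 (OK), 1 (WARNING), 2 (CRITICAL) or 3 (UNKNOWN), which is the Nagios plugin convention. A rough sketch for disk usage, assuming POSIX `df -P` output; the function name and default thresholds are made up for illustration, and the stock `check_disk` plugin does this more thoroughly.

```shell
# Hypothetical helper: Nagios-style disk check built on POSIX df output.
# Usage: check_disk_usage [mount] [warn_percent] [crit_percent]
check_disk_usage() {
  local mount="${1:-/}" warn="${2:-80}" crit="${3:-90}" used
  # df -P prints a header plus one line per filesystem; field 5 is "Capacity" (e.g. 42%).
  used=$(df -P "$mount" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }')
  if [ -z "$used" ]; then
    echo "DISK UNKNOWN - cannot read usage for $mount"
    return 3
  fi
  if [ "$used" -ge "$crit" ]; then
    echo "DISK CRITICAL - $mount at ${used}%"
    return 2
  elif [ "$used" -ge "$warn" ]; then
    echo "DISK WARNING - $mount at ${used}%"
    return 1
  fi
  echo "DISK OK - $mount at ${used}%"
  return 0
}
```

For example, `check_disk_usage /var 85 95` from cron or NRPE; whatever calls it acts on the exit code, so nobody has to go looking through logs to find out the disk filled up.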

Preaching to the choir. They hire us to performance-tune their app, but then their IT staff manages to miss the most basic things.

Good luck connecting to each of the 36 pods and grepping the file over and over again

for X in $(seq -f host%02g 1 9); do echo $X; ssh -q $X "grep the shit"; done

:)

But yeah fair, I do actually use a big data stack for log monitoring and searching… it’s just way more usable haha

Just write a bash script to loop over them.

You can run the logs command against a label so it will match all 36 pods

Stern has been around forever. You could also just use a shared label selector with kubectl logs and then grep from there. You make it sound difficult if not impossible, but it's not. Combine it with egrep (or grep -E) and you can pretty much do anything you want right there on the CLI.

I don't know how k8s works, but if there's a way to execute just one command in a container and then exit, like chroot, wouldn't it be possible to just use xargs with a list of the container names?

yeah, just use kubectl and pipe stuff around with bash to make it work, pretty easy
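A sketch of the loop these replies describe: list the pods behind a label selector, run grep inside each one with `kubectl exec`, and prefix every match with the pod name. It assumes the app writes to a log file inside the container and that the image ships `grep`; the function name, selector, pattern and path are placeholders. A `while read` loop stands in for `xargs` here, though `xargs` would do the job too.

```shell
# Hypothetical helper: grep a log file inside every pod matching a label selector.
# Usage: grep_pods <selector> <pattern> <path-inside-container>
grep_pods() {
  local selector="$1" pattern="$2" path="$3" pod
  # "-o name" prints one "pod/<name>" per line, a form kubectl exec accepts as-is.
  kubectl get pods -l "$selector" -o name |
    while read -r pod; do
      # </dev/null keeps the command in the loop body from reading the pod list
      # on stdin (the classic ssh-inside-a-while-loop trap).
      kubectl exec "$pod" -- grep -- "$pattern" "$path" </dev/null |
        sed "s|^|${pod#pod/}: |"
    done
}
```

Usage would look like `grep_pods app=web ERROR /var/log/app.log`. For apps that log to stdout instead of a file, `kubectl logs -l` covers the same ground without exec.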

This is what I was thinking. And you can't really graph things over time, which is critical for a lot of workflows.

I get that Splunk and Elastic are unwieldy beasts that take way too much maintenance for what they provide for many orgs, but to think grep is a replacement is kinda crazy.
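For what it's worth, a crude count-over-time series can be pulled out of a flat file with awk, which is roughly the number a log-to-metric query would graph; you would still need something else to draw the chart. A sketch assuming ISO-8601 timestamps in the first field and the level in the second (a made-up layout):

```shell
log=$(mktemp)
cat > "$log" <<'EOF'
2024-05-01T10:00:05 ERROR db timeout
2024-05-01T10:00:40 ERROR db timeout
2024-05-01T10:01:10 INFO api ok
2024-05-01T10:01:30 ERROR api timeout
EOF

# Bucket ERROR lines by minute: the first 16 characters of the timestamp are YYYY-MM-DDTHH:MM.
# ISO-8601 strings sort chronologically, so a plain sort puts the buckets in time order.
errors_per_minute=$(awk '$2 == "ERROR" { n[substr($1, 1, 16)]++ }
  END { for (m in n) print m, n[m] }' "$log" | sort)
printf '%s\n' "$errors_per_minute"
rm -f "$log"
```

Note that minutes with zero errors simply don't appear, which is one of the things a real metrics backend handles for you.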

Since you are talking about pods, you are obviously emitting all your logs on stdout and stderr, and you have of course also labeled your pods nicely, so grepping all 36 pods is as easy as kubectl logs -l <label-key>=<label-value> --tail=-1 | grep <search-term> (the --tail=-1 matters, because with a selector kubectl logs only shows the last 10 lines per pod by default)

Let me introduce you to syslogd.

But, well, it's probably overkill; you almost certainly just need to log to a shared volume.

That's why tmux has synchronize-panes!

...or as I've come to call it grep+linux

What the fuck is the center even talking about? Is that shit a thing people do?

Yeah, ofc it is.

I'm working in a system that generates 750 MILLION non-debug log messages a day (and that isn't even as many as some others).

Good luck grepping that, or making heads or tails of what you need.
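To put 750 million messages a day in perspective, plain shell arithmetic gives the average rate. The 200-bytes-per-message figure is an assumption for illustration, not something from the comment.

```shell
messages_per_day=750000000
seconds_per_day=$((24 * 60 * 60))                     # 86400
per_second=$((messages_per_day / seconds_per_day))    # integer division: average rate
bytes_per_message=200                                 # assumed average line size
gb_per_day=$((messages_per_day * bytes_per_message / 1000000000))
echo "~${per_second} messages/s on average, ~${gb_per_day} GB/day of raw text"
```

That prints roughly 8,680 messages per second and, under the assumed line size, about 150 GB of text per day, which is a lot to scan linearly every time someone has a question.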

We put a lot of work into making the process of digging through logs easier. The absolute minimum we can do is dump it into Elasticsearch so it's available in Kibana.

Similarly, in a K8s env you need to get logs off your pods ASAP, because pods are transient and disposable. There is no guarantee that a particular pod will live long enough to have introspectable logs on that particular instance (of course there is some log aggregation available in your environment that you could grep, but its actual usefulness is questionable, especially if you don't know what you need to grep for).

There are dozens, hundreds more problems that crop up as you scale the number of systems and the number of people working on them.

This write-up could be the next KRAZAM skit

A good chunk of it relates to the Elasticsearch stack, so yeah, it's a thing people do.

My life got so much better after we abandoned Elasticsearch at work

The middle thing is not what normies do, it is what enterprises do, because they have other needs than just knowing 'error where?'

Do folks still use Logstash here? Filebeat and ES get you pretty far. I've never been deep in ops land, though.

Why grep log files when I can instead force corporate to pay a fuck ton of money for a Splunk license.

It's such an insane amount of money

I used to work for a very, very large company, and there a team of 9 people and I had one entire job: keeping the shitty QRadar stack running (it did not want to run). I would like to make abundantly clear that our job was not to use this stack at all, simply to keep it running. Using it was another team's job.

Amazing. Depressing, but amazing.

remember this shit when people talk about how we can't just give people money for doing nothing

we're already just inventing problems for people to fix so we can justify paying them

As someone who used to troubleshoot an extremely complex system for my day job, I can say I've worked my way across the entire bell curve.

grep -nr <pattern>, thank me later.

I’d also add -H, which prints the file name even when grep ends up searching a single file, and --color if your terminal supports it.
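A quick run of those flags against a throwaway directory; the file names and contents are made up. `--color=auto` is left out of the script since it only changes anything when output goes to a terminal (and it is a GNU/BSD extension rather than POSIX).

```shell
logdir=$(mktemp -d)
printf 'ok\nERROR disk full\n' > "$logdir/app.log"
printf 'ERROR timeout\nok\n' > "$logdir/worker.log"

# -r: recurse into the directory, -n: prefix line numbers, -H: always show the file name.
# Directory traversal order isn't guaranteed, so sort for stable output.
matches=$(grep -rnH ERROR "$logdir" | sort)
printf '%s\n' "$matches"
rm -r "$logdir"
```

Each match comes out as `path:line:text`, e.g. `.../app.log:2:ERROR disk full`, which is easy to jump to in an editor.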

Good tracing & monitoring means you should basically never need to look at logs.

Pipe them all into a dumb S3 bucket with under a week of retention and grep away for that one time out of 1000 when you didn't put enough info on the trace or fire enough metrics. Remove redundant logs that are covered by traces and metrics to keep costs down (or at least drop them to debug level, keep them for local dev if they're helpful there, and only store info and up).

What a nice world you must live in where all your code is perfectly clean, documented and properly tracked.

And not subject to compliance based retention standards

Well, I didn't say anything about perfectly clean, but I agree: it's very nice to work on my current projects, where we've set up observability to modern standards, compared to any of the log-vomiting services I've worked on in the past.

It's obviously easier to start with everything set up nicely in a greenfield project, but don't let perfect be the enemy of good: iterative improvements to badly designed observability nearly always pay off.

"Log" is the name of the place you write your tracing information into.

Please excuse my ignorance, but what is grep, what are the dos and don'ts of logging, and why are people here talking about having an entire team maintain some pipeline just to handle logs?

It's a command-line tool that prints only the lines matching a pattern. So something like

cat log.txt | grep Error5

Would output only lines containing Error5

You can just do

grep Error5 log.txt

In the back of my mind I know this is there, but the cat | grep pattern is just muscle memory at this point

I've been 'told off' so many times by the internet for my cat and grep combos that I still do it, then I remove the cat, it still works, and I feel better. shrug

Just remember that if you aren't actually concatenating files, cat is always unnecessary.
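A small demo of that rule with made-up files: on a single file both forms print the same lines, while with several files they differ, because grep prefixes each match with its file name and `cat | grep` cannot (it behaves like `grep -h`).

```shell
dir=$(mktemp -d)
printf 'Error5 at boot\nall good\n' > "$dir/a.log"
printf 'Error5 again\n' > "$dir/b.log"

# One file: cat only copies it into grep's stdin, so both forms match the same lines.
with_cat=$(cat "$dir/a.log" | grep Error5)
without_cat=$(grep Error5 "$dir/a.log")

# Several files: grep labels each match with the file it came from...
labelled=$(grep Error5 "$dir/a.log" "$dir/b.log")
# ...while concatenating first (an actual use of cat) drops the labels, like grep -h.
merged=$(cat "$dir/a.log" "$dir/b.log" | grep Error5)

printf '%s\n---\n%s\n' "$labelled" "$merged"
rm -r "$dir"
```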

Programmer Humor
